Warning: Contrarian point of view ahead. Proceed at your own risk.

We live in an era of big data. Everything we view online, every search, every purchase on Amazon is logged and stored in databases. Remarketing, that creepy experience of seeing online ads that reflect your recent activity, is built on this vast reservoir of our personal data footprints.

From a consumer marketing perspective, leveraging big data is an attractive and powerful capability. If brick-and-mortar retailers can use data analytics to identify consumer behavior, predicting the best times and best product pairings for in-store end-cap displays to increase sales, then big data will have a big payback. And if online retailers can use remarketing, intention-sniffing algorithms, and keyword analysis to increase sales, big data again can show big results. For business-to-consumer marketing, the age of big data has been utterly transformative.

Should Museums Get on the Big Data Train?

But should museums engage in such cutting-edge data analytics and data-driven tactics to promote exhibitions, increase site traffic, or refine engagement with in-museum displays? How often should data-driven user studies be employed in decision making?

My contrarian warning betrays my opinion. For several reasons that I’ll present in this article, I think that most (not all) data collection, data analysis, and data-driven decision making is more of a burden than a benefit to museums.

I’ll qualify my big data skepticism by acknowledging that museums should track and review basic benchmark performance metrics. Reviewing on-site and online visitor metrics, ticket sales, donor activity, and so forth is as important as keeping good books and reviewing your balance sheets regularly. It reflects basic good stewardship and management. Conducting occasional visitor satisfaction surveys can be helpful too. (Though these days, when it seems that every purchase or customer service interaction results in an invitation to take a survey, it may be best to lay off this practice for a while.)

But too often I’ve seen museums get caught up in the rush toward data mining and analytics. User testing becomes the answer to every problem. Lots of time and effort are invested in collecting and analyzing data, and too little time is spent evaluating whether the resulting insights had any tangible impact on results or on decision making.

For example, at Museums and the Web last week, I sat in a session about how one museum went to great lengths to measure visitor engagement with a few interactive displays. This included watching video footage of the gallery and manually counting how many people interacted with the digital exhibits (while roughly guessing at their demographic profiles). The team also used app-tracked “dwell time” to correlate new sessions with observed use.

Based on all this data, and the analysis of it, the museum did gain an insight. Interactives with more interpretive overlays had less engagement than those with fewer. If an interactive was limited to just three interpretive overlays, users tended to view all three. But when there were five or more, they disengaged sooner. That is a valuable observation that can inform future digital exhibition design.

But at what cost? The session’s title billed its approach to measuring engagement as “Anonymous and Cheap.” And while the tools and methods used to collect the data had little hard cost, I wonder how cheap the effort really was. Assign a real dollar value to the staff’s time, and the analysis may not be as cheap as it was purported to be. On top of the cost of staff time, there are also opportunity costs: what else might staff have accomplished if their time had been allocated to other efforts? If we factor those real but hidden costs back in, we need to ask: was it worth it?

Tellingly, the speaker concluded his session with a tongue-in-cheek xkcd comic of a UX analyst geeking out over a huge data set he’d collected. When asked what the results were, the punchline was, “We’re not sure yet, but imagine the possibilities! This has to be valuable!”

That comic betrays a real problem, and the economics of data-driven efforts should be evaluated more soberly against the long-term mission of museums.

So let’s dive deeper into how the costs of data collection and analysis weigh against their benefits, for museums in particular. After all, museums have limited resources of both time and money, and wasting them is never in the collection’s best interest.

How Big Does Big Data Need to Be?

One major factor to consider, when it comes to the effectiveness of data-driven testing, is the size of the data pool you will be able to draw upon. If you’re Amazon.com and you’re building transactional user interfaces that will be used by millions of users, a five percent difference in task completion rates can translate into significant benefits. So investing thousands of dollars in user studies makes all the sense in the world. But if your museum store sells $100,000 in online merchandise per year, investing thousands of dollars to reduce the transactional friction of your ecommerce checkout process is hardly worth the effort.
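To make the scale argument concrete, here is a rough payback calculation in Python. It is a sketch under loudly stated assumptions, not data from any real study: it assumes a better checkout translates directly into the same percentage increase in sales (a generous assumption), and the study cost, gross margin, and large-retailer sales figure are hypothetical round numbers chosen only for illustration.

```python
import math


def payback_years(study_cost: float, annual_sales: float,
                  relative_lift: float, gross_margin: float = 1.0) -> float:
    """Years of extra profit needed to recover the cost of a usability study.

    Hypothetical model: the study's fix raises annual sales by `relative_lift`
    (0.05 means 5%), and `gross_margin` of each extra dollar of sales is profit.
    Returns math.inf if the fix produces no gain at all.
    """
    extra_profit_per_year = annual_sales * relative_lift * gross_margin
    if extra_profit_per_year <= 0:
        return math.inf
    return study_cost / extra_profit_per_year


# Hypothetical inputs: a $10,000 study, a 5% sales lift, a 50% gross margin.
STUDY_COST = 10_000
LIFT = 0.05
MARGIN = 0.5

museum_store = payback_years(STUDY_COST, 100_000, LIFT, MARGIN)
big_retailer = payback_years(STUDY_COST, 1_000_000_000, LIFT, MARGIN)

print(f"Museum store ($100k/yr): {museum_store:.1f} years to break even")
print(f"Large retailer ($1B/yr): {big_retailer * 365:.2f} days to break even")
```

With these made-up inputs, the museum store needs about four years of extra profit just to recoup the study, while the large retailer recovers the same outlay in a fraction of a day. The point is not the exact figures but how strongly the answer depends on the size of the sales base.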

Additionally, data quantity is a significant factor in data accuracy. For pure data analytics, the smaller your data source, the greater the potential for distortion in your results. You may have heard about “machine learning,” or its more dramatically hyped title, “artificial intelligence.” But for any real value to come from machine learning, you need massive data sets: millions upon millions of records. Likewise, with user studies, you need a certain minimum quantity of data before you can expect accurate results. And even if you do have a statistically valid sample with dependable results, there is no guarantee that the results will be actionable. If a particular question receives a 55/45 split, is that enough to act on?
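To put a number on the 55/45 question, the Python sketch below computes an approximate 95% confidence interval for a survey result, using the standard normal (Wald) approximation for a proportion. The sample sizes are hypothetical. The Wald interval is a simple textbook approximation that becomes unreliable for very small samples or lopsided splits, so treat the output as a rough guide rather than a rigorous test.

```python
import math


def proportion_ci(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate confidence interval for a proportion (normal/Wald method).

    z = 1.96 corresponds to roughly 95% confidence.
    """
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p - half_width, p + half_width


# Hypothetical surveys in which 55% of respondents gave the same answer.
for n in (100, 400, 1_000):
    low, high = proportion_ci(n * 55 // 100, n)
    if low <= 0.5 <= high:
        verdict = "could still be a 50/50 split"
    else:
        verdict = "probably not a 50/50 split"
    print(f"n={n:>5}: 95% CI {low:.1%} to {high:.1%} ({verdict})")
```

With 100 respondents, a 55/45 result is statistically indistinguishable from an even split; it takes a few hundred respondents before the interval clears 50%. And even then, a statistically real preference is not, by itself, a reason to act.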

So my first caution for museums, before getting swept up in the data-testing trend, is to consider the sample size you can realistically obtain. The smaller the sample size, the more you’ll need to discount the results, and thus the value of the effort in the first place.

A More Philosophical Consideration

Aside from the pure economics of deciding when user testing and data analysis is appropriate, museums (and anyone else considering such efforts) need to understand the progression from data to insight.

Data itself is opaque. Raw data, such as server logs, are next to useless by themselves. You need to process data before it can start to reveal helpful information. In information science, the D.I.K.W. hierarchy (Data, Information, Knowledge, Wisdom) describes the steps needed for data to become insight. Data needs to be processed and analyzed to become Information. Information needs to be studied in order to become Knowledge. And Knowledge, while helpful, does not provide much direction until it is rightly understood and put into practice through Wisdom (insight).

There is often a high initial cost at the front end of this process. Costs may fall as you move from D to I to K to W, but there are also, always, diminishing returns. A data set may contain thousands or millions of records, and much of it will be noise. So the data will need to be sifted, sorted, and refined to produce smaller sets of information.

Consider Google Analytics. It takes raw tracking data collected from your site’s visitors and turns it into tables, graphs, segments, and stats, processing lots of raw data into information. But that information will consist of far fewer (albeit qualitatively more useful) reports than the original data used to create them. Fortunately, web stats and Google Analytics are automatic and essentially free. But not all data collection processes are free (like counting people from a video feed and manually producing stats).

But even when you are provided with great information, moving from information to knowledge is another phase of the process, one that involves new effort and further diminishing (though more valuable) returns. In the case of Google Analytics, the effort may involve taking a short course to learn how to turn its reports into helpful bits of knowledge. With a little work you can learn how to compare the bounce rate of your homepage with the bounce rate of internal pages. You can discover which pages have the longest viewing durations. You can discover whether your mobile visitors gravitate to certain kinds of content compared to your desktop visitors, and so forth. There is plenty of knowledge to glean from the information in Google Analytics.

But what about that last step, moving from knowledge to wisdom (or insight)?

Based on the data, and the information gleaned from it, suppose you gain the knowledge that your site has an average bounce rate of 50%. Should you freak out? Should you commission a study to find out why? Or is it normal? (It is.) Suppose you find that mobile visitors view fewer pages on average than desktop visitors. Is that something you should look into fixing? Or is it normal? (It is.) The progression from Knowledge to Wisdom is what matters most. You can have all the data in the world, but you still need an analytics platform to get information out of it. And you might cultivate the skills to get knowledge out of information, but what should you do with that knowledge?

And this brings us to one of the least recognized limitations of data-driven decision making compared with experience-based decision making. There will always be a huge gulf between what is and what ought to be. Data, information, and knowledge consist of what is. They tell us what has happened. Wisdom is knowing what you ought to do based on the knowledge you have.

We have already considered the economic limitations built into seeking wisdom from big data. But there is also a real limitation in the nature of the progression itself. The reality is that wisdom or insight does not arise directly from the progression of data to information, or from information to knowledge. The leap from knowledge to wisdom is neither automatic nor entirely derived from that knowledge. Certainly knowledge informs wisdom, but wisdom is also informed by other sources such as experience, priorities, commitments, and long-term mission. Sometimes these considerations can outweigh even the most data-supported and valid observations of user experience.

How Much Impact After All?

Finally, after running the numbers and calculating the costs of the effort needed to collect, analyze, interpret, and synthesize data into actionable information, we need to consider how much impact the outcome of these efforts really produces.

Let’s return to that digital engagement study. There was certainly an actionable outcome from those efforts: they learned that future digital exhibits should be limited in their interpretive detail. I would argue that this is also a common-sense observation. When museum visitors are in “skim” mode (which almost all of them are when trying to absorb an entire museum in one visit), their available attention span for any given exhibit is likewise limited. You have to keep things short and to the point. Too much depth, and people bounce.

But the basic reality that the vast majority of museum visitors are always, and necessarily, in skim mode means that even if you perfectly optimized a particular exhibit for maximum engagement, it would still be just one interaction among hundreds, each accounting for roughly one-hundredth of the overall impact made on that visitor during a skim-oriented visit. At the end of the day, most museum visitors have had a lovely and edifying time: having enjoyed your galleries and exhibits, they feel refreshed and have perhaps learned a few new things from the experience. Given the overall limits of impact on skim visits, does moving the engagement needle by 10 to 20 percentage points, for just a subset of your skim visitors (those who interact with a specific installation), really warrant the investment that went into gaining that particular insight? And how many studies and analyses have been run that did not produce even that level of insight? Could museums spend their time, energy, and effort in more productive ways? What if the time spent on that study had been reallocated to docent recruitment and training? Might that investment have produced even more potential engagement? (Someone should run a study.)

My contrarian point of view on big data for museums is not a denial that helpful information can come from data collection and analysis, but rather a questioning of how much value these insights have compared to their costs, especially when you consider the unseen opportunity costs of the other ways that effort could have been spent.

Should You Change if Data Demands It?

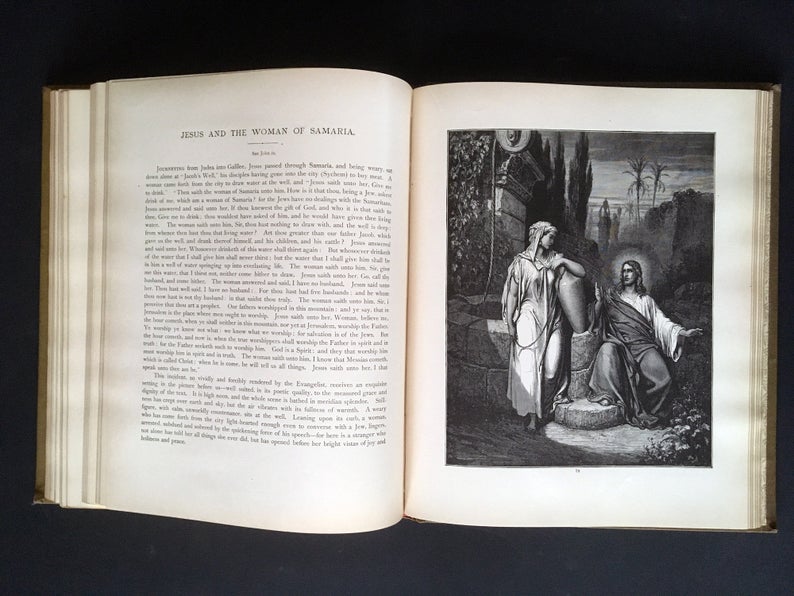

I’ll conclude with one final example of why data-driven decision making is even more questionable in a museum context. Suppose you surveyed your local museum visitors, or collected Wi-Fi hotspot data on which exhibits and galleries they visited most, and the data conclusively showed that the average visitor prefers, and engages with, your contemporary galleries 34 percent more than the medieval tapestry and iconography galleries. Suppose, too, that the Asian pottery hall was the least engaged part of the museum. Would you, could you, deaccession parts of the medieval and Asian collections and replace them with contemporary art?

Museums are collections donated in trust. There is an obligation, a fiduciary responsibility, to the collection you have, not only to the parts of the collection the public happens to be most interested in this decade. Besides, if you wanted to be fully data-driven, you’d move all that old stuff out and replace it with an IMAX theater or immersive VR experiences. The data show that people enjoy those experiences much more than looking at ancient ossuaries. But there are responsibilities and principles beyond data-driven metrics that must inform the mission of museums. If any institutions need to hold steady on mission, regardless of what the latest polling data suggests the public prefers right now, it is our museums.

Don’t Give In to the F.O.M.O.

So to the extent that data is readily available (like website stats, attendance records, and financial information), that you have affordable systems to process that data (like Google Analytics and CRMs like Blackbaud’s), and that gleaning knowledge from these sources is part of your routine, by all means check your assumptions and be informed by your data. But if you’re considering costly studies, or feeling pressure from the hype around big data, “machine learning,” and overactive imaginings of UX possibilities, I’d advise a more cautious and steady approach to decision making. Don’t give in to the Fear Of Missing Out. Keep preserving, presenting, and promoting the treasures from your collection. We all need our museums to keep us steady, and to keep us reflecting on our cultural legacies in this high-speed age of big data.