After another great Museums and the Web conference, I wanted to take a minute to look back at a phrase that ran through many of the most popular sessions: immersive storytelling. This is nothing new; museums have long explored different ways to capture their visitors’ attention with this type of narrative. Over the last couple of years, sessions have popped up with buzzwords like virtual reality, augmented reality, and transmedia. However, each year I leave still skeptical of these technologies and of how they might promote the core missions of most museums.

Thursday’s opening plenary kicked off the conference with a bang. The speaker was Vicki Dobbs Beck from Lucasfilm’s ILMxLAB, and for the next hour we were immersed in pop culture icons like Star Wars and Indiana Jones. It was impossible for my inner child NOT to geek out with nostalgia as she described ILMxLAB’s efforts to push these familiar characters and their storylines into a new space with VR technology. For a second there, I even forgot I wasn’t actually at Comic-Con. While most of her examples were Star Wars-style hyper-reality experiences such as Star Wars: Secrets of the Empire at Downtown Disney, there was one example from a museum: LACMA’s concept installation CARNE y ARENA.

While these VR experiences were mesmerizing, they also raised some jarring questions. Given most museums’ severe budget limitations, is the jump into VR and AR worth it? If a museum’s new goal is to compete for attention through this technology, would its mission change into something different as it chases the allure of the “shiny”? And when the vehicle for telling a story is VR, can the story stay focused on the art, or does the emphasis shift toward the technology itself?

For stories told through the lens of corporate pop culture, these questions don’t apply. Their mission is the bottom line, and for just that reason the “shiny” often overshadows the story. How often have you gone to a movie just to see the CGI monster, or visited a theme park to play with the newest technology?

For museums, the question might need to change from “How could we…” to “Why should we compete at the same game with a very limited set of resources?”

However, it was refreshing to step into another set of sessions that explored a different approach to immersive storytelling, one that is gaining momentum at this year’s Museums and the Web. Instead of chasing the “shiny”, these sessions focused on the art itself and on the stories that support the core mission of museums. What they all had in common was their use of Deep Zoom.

Introduction to the Deep Zoom

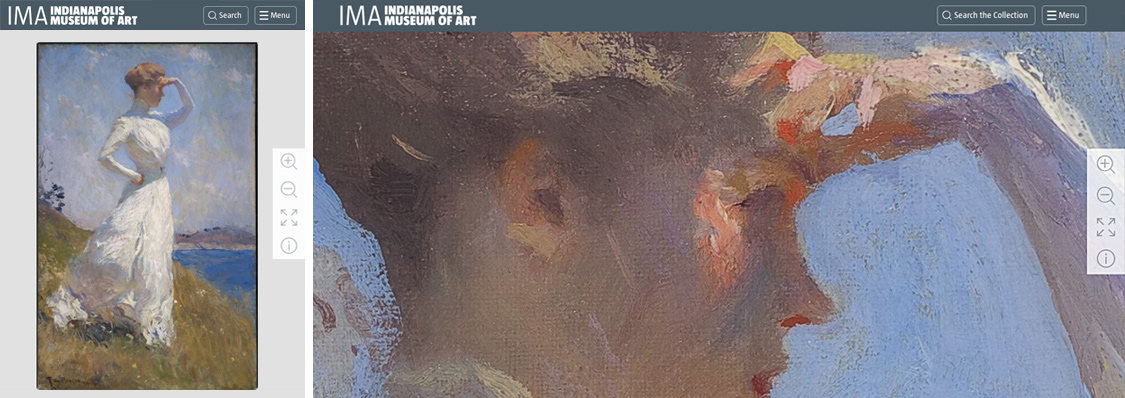

The best introduction to Deep Zoom is to experience it. The San Francisco Museum of Modern Art and the Indianapolis Museum of Art are just two museums that have applied this technology to many of their paintings, letting us dive in and discover the rich details of Frank Weston Benson’s Sunlight or Wayne Thiebaud’s Flatland River. When you first arrive at either of these images, you might not notice anything beyond a scaled-down representation of the artwork, apart from a magnifying-glass zoom/expand icon off to the side. Once you interact with the image or the icon, however, you can zoom to whatever level you like and pan around the painting, filling your screen with a specific area of interest in incredibly rich detail.

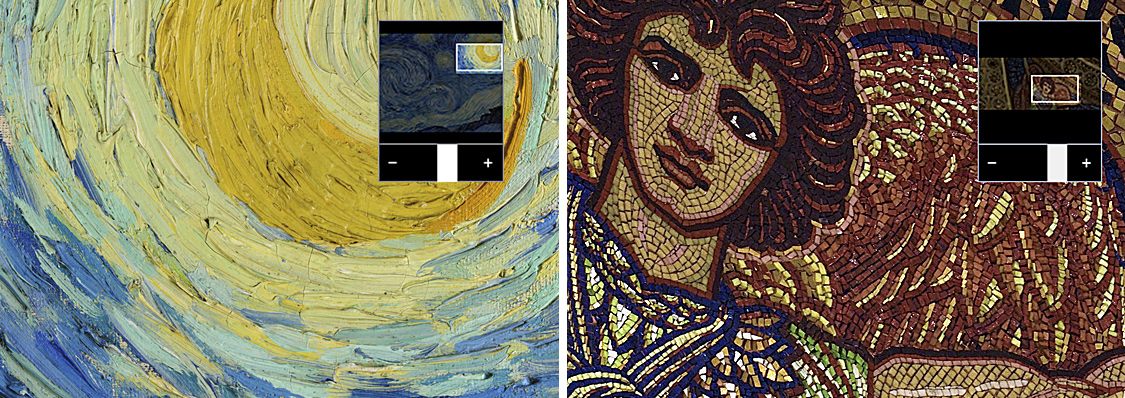

The zoom is limited only by the resolution of the photograph. With some pieces, you can view the artwork at the gigapixel level, where a couple of brushstrokes fill the screen and you share the vantage point of the artist’s eye. Who wouldn’t want to see the individual swirling brushstrokes in Van Gogh’s The Starry Night? Or close the physical distance to view each of the millions of gold and glass tiles high up in the ceiling of St Paul’s Cathedral?
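For readers curious why the photograph sets the limit: deep zoom viewers such as OpenSeadragon typically load an image as a pyramid of tiles, with each level half the resolution of the one above it. The minimal sketch below follows the convention of Microsoft’s Deep Zoom Image (DZI) format, where level 0 is a single pixel and the top level is the full-resolution image. The 256-pixel tile size and the 40,000 × 30,000 image are illustrative assumptions, not figures from any museum mentioned here.

```python
import math


def pyramid_levels(width: int, height: int, tile_size: int = 256) -> list[tuple[int, int, int, int]]:
    """Return (level, level_width, level_height, tile_count) for each level of a DZI-style pyramid."""
    # The top level holds the full-resolution image; each level below halves it, down to 1x1.
    max_level = math.ceil(math.log2(max(width, height)))
    levels = []
    for level in range(max_level + 1):
        scale = 2 ** (level - max_level)
        w = max(1, math.ceil(width * scale))
        h = max(1, math.ceil(height * scale))
        # Tiles are laid out on a grid; partial tiles at the edges still count as tiles.
        tiles = math.ceil(w / tile_size) * math.ceil(h / tile_size)
        levels.append((level, w, h, tiles))
    return levels


# A hypothetical 1.2-gigapixel scan (40,000 x 30,000 pixels).
levels = pyramid_levels(40_000, 30_000)
print(len(levels))   # 17 levels, from 1x1 up to full resolution
print(levels[-1])    # (16, 40000, 30000, 18526)
```

The top level is the ceiling: once the viewer reaches it, there are no finer tiles to fetch, which is why the zoom can never reveal more detail than the original photograph captured.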

Note that many of the sessions that explored Deep Zoom focused on IIIF (the International Image Interoperability Framework). While this set of specifications is used by many of the open source applications that implement deep zoom, such as OpenSeadragon, for now we will just look at the end product for users and how museums can put it to work. Stay tuned to our blog for an upcoming post on IIIF and OpenSeadragon.

Creating Narrative within your Art

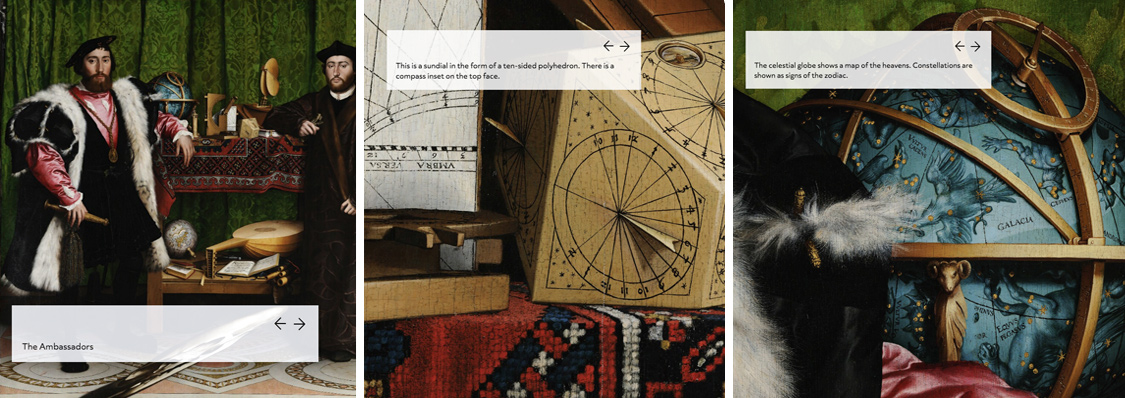

When Deep Zoom reveals specific areas of detail within a piece of art, museums and educators can begin to explore storytelling within the piece itself. By controlling the order of a set of zoomed-in areas, paired with narrative text, a story can unfold simply by scrolling or clicking through a piece of art.
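One way to picture this is as an ordered list of regions, each paired with a passage of text. The minimal sketch below assumes the regions are served through a IIIF Image API endpoint; the base URL, identifier, coordinates, and narration are hypothetical placeholders, and this is not the method of any tool discussed in this post. Each URL follows the Image API pattern `{base}/{identifier}/{region}/{size}/{rotation}/{quality}.{format}`, with the region given as `x,y,w,h` in full-image pixels and `!800,800` asking the server to fit the crop within 800 × 800 pixels while preserving its aspect ratio.

```python
from dataclasses import dataclass
from typing import Iterator


@dataclass
class StoryStep:
    """One stop in a guided story: a pixel region of the full image plus narration."""
    x: int
    y: int
    w: int
    h: int
    text: str


def region_url(base: str, identifier: str, step: StoryStep, max_px: int = 800) -> str:
    """Build a IIIF Image API URL that crops the step's region and fits it in max_px x max_px."""
    region = f"{step.x},{step.y},{step.w},{step.h}"  # x,y,w,h in full-image pixels
    size = f"!{max_px},{max_px}"                      # best fit, aspect ratio preserved
    return f"{base}/{identifier}/{region}/{size}/0/default.jpg"  # no rotation, default quality, JPEG


def tell(base: str, identifier: str, steps: list[StoryStep]) -> Iterator[tuple[str, str]]:
    """Yield (image URL, narration) pairs in story order."""
    for step in steps:
        yield region_url(base, identifier, step), step.text


# Hypothetical endpoint, identifier, coordinates, and text, for illustration only.
BASE = "https://example.org/iiif"
IDENTIFIER = "painting-001"
story = [
    StoryStep(0, 0, 8000, 6000, "Start with the whole composition."),
    StoryStep(1200, 900, 1500, 1500, "Zoom in on a detail in the upper left."),
    StoryStep(5200, 3800, 900, 900, "Finish on a small detail in the lower right."),
]

for url, narration in tell(BASE, IDENTIFIER, story):
    print(narration)
    print("  ", url)
```

Keeping the story as plain ordered data is a design choice of this sketch: the same list could drive a scrolling page, a timed autoplay, or a series of social posts without changing the regions or the text.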

CogApp’s session, Making Metadata Into Meaning: Digital Storytelling With IIIF, offered a couple of examples of this approach. They even created a website, Storiiies, to show how this technique can work in a variety of ways. Uncover the story inside Hans Holbein’s The Ambassadors as the narration guides you through a selection of the painting’s many rich details. Once set up, the narrative isn’t limited to this one interface; the same story can be told through a series of tweets, autorun narration, or even a livestreamed guided demo.

This same paired-narrative approach was also showcased by Google Arts & Culture and explored in their session Recent Collaborations, Experiments, And Preservation From The Google Cultural Institute. While we have discussed other implications of this session in a previous post, their use of deep zoom is still a great example of immersive storytelling through individual works of art. You can now be led through the master’s technique of light and shadow in Rembrandt’s The Night Watch, or learn, panel by panel, the deep and complex meaning of Van Eyck’s The Ghent Altarpiece. And without the guided tour and deep zoom focus, it would be almost impossible to decipher all the details of the construction depicted in Pieter Bruegel’s The Tower of Babel.

Using Multiple Interfaces to Create a Richer Experience

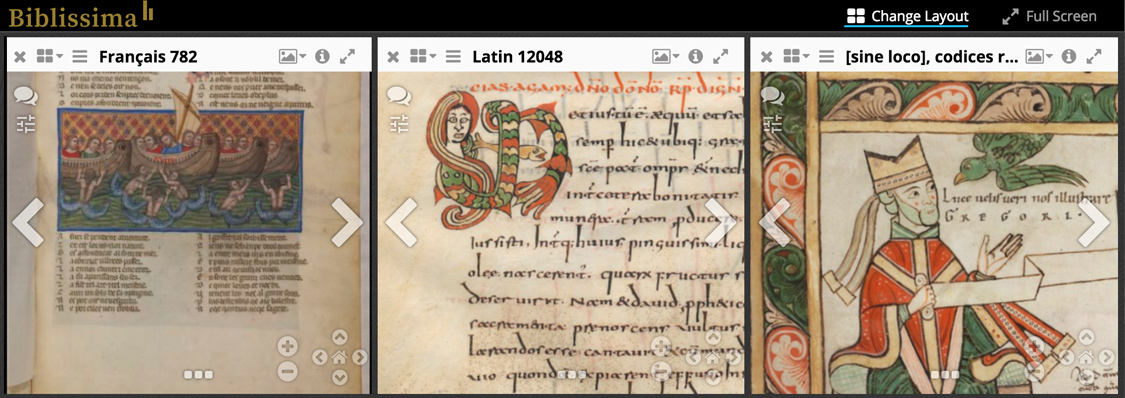

Other presenters at Museums and the Web showed what can be achieved by combining open source tools like OpenSeadragon with the Mirador platform to extend the possibilities of deep zoom. With Mirador, museums can display multiple deep zoom windows so users can compare and contrast the details of different pieces side by side. The Biblissima Project has an example of this technique.

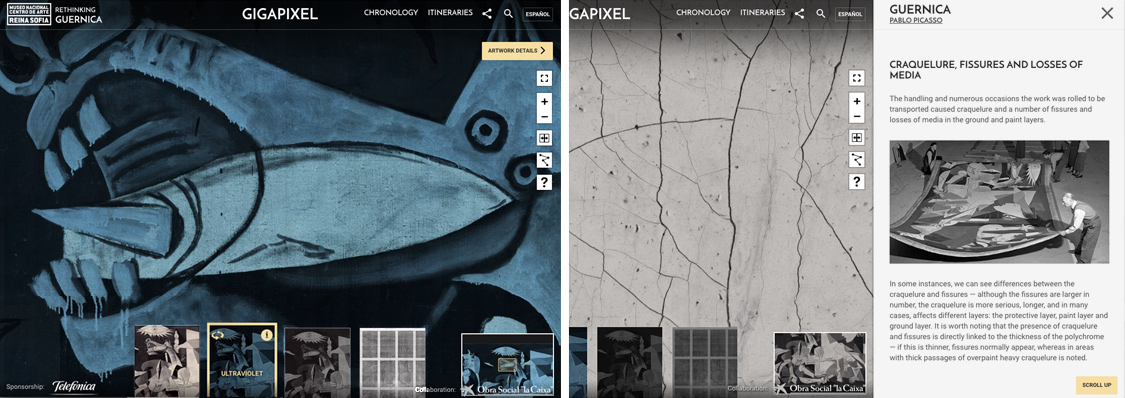

However, one of the best examples of the potential of these features rightly earned GLAMi honors at Museums and the Web. Rethinking Guernica is an amazing compilation of these techniques, offering a very deep dive into Picasso’s painting Guernica. The mini-site first presents a brief scrolling history of the work and its surrounding timeline before you enter a gigapixel version of the masterpiece with matching versions in X-ray, ultraviolet, and infrared. Not only can you click to reveal each of these views of the same area of the painting, but you can also compare two of the images side by side in a Mirador-style window setup. In “Artwork Details”, the viewer is presented with a story, paired with corresponding visuals of the piece, that describes its composition, the artist’s techniques, and what scholars learned from the combined imaging techniques and from the restoration of the piece itself.

While museums are just beginning to test the potential of immersive storytelling with deep zoom, it is clear that this platform offers an effective way to explore art, tell its story, and relay the vast knowledge hidden from a first glance at your collection. It might not seem as sexy as the VR dangled in front of you with images of Darth Vader, but it gives museums a viable technology that lets them focus on what they know best: their art and the stories that can further their mission.